Tag: Medical History

By Editor Spencer J. Weinreich I hasten to assure the reader that Bloodflower’s Melancholia is not contagious. It is not fatal. It is not, in fact, real. It is the creation of British novelist Tamar Yellin, her contribution to The… Continue Reading →

By Editor Spencer J. Weinreich It is seldom recalled that there were several “Great Plagues of London.” In scholarship and popular parlance alike, only the devastating epidemic of bubonic plague that struck the city in 1665 and lasted the better… Continue Reading →

By Editor Spencer J. Weinreich Four enormous, dead doctors were present at the opening of the 2017 Joint Atlantic Seminar in the History of Medicine. Convened in Johns Hopkins University’s Welch Medical Library, the room was dominated by a canvas… Continue Reading →

By guest contributor Lynn-Salammbô Zimmermann In the mid-14th century BCE, a group of young female singers contracted an unknown disease. A corpus of letters from Nippur, a religious and administrative center in the Middle Babylonian kingdom (modern-day Iraq), tells us about… Continue Reading →

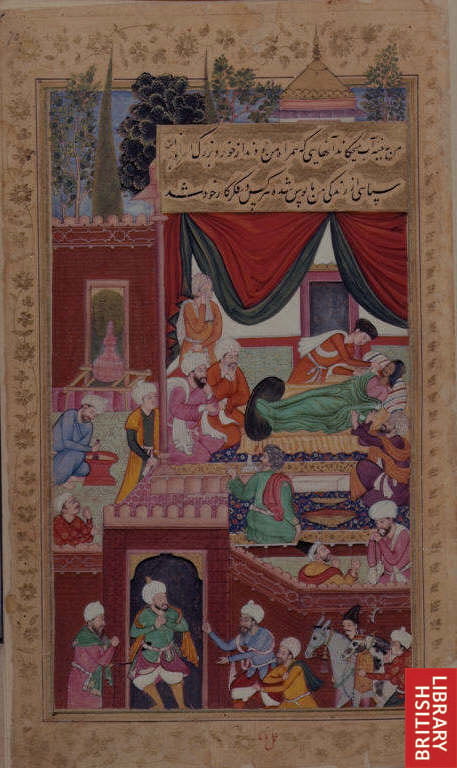

by guest contributor Deborah Schlein When Greek medical texts were transmitted and translated in the ʿAbbasid capital of Baghdad in the ninth and tenth centuries, they paved the way for original Arabic medical sources which built off Greek humoral theory… Continue Reading →

by contributing editor Carolyn Taratko In late May, President Obama laid a wreath at the Hiroshima Peace Memorial, making him the first sitting U.S. President to visit the city that was the target of the first atomic bomb on August… Continue Reading →

by guest contributor Elisabeth Brander Alchemy, and its association with the quest for the always-elusive philosopher’s stone, is one of the most fascinating aspects of early modern science. It was not only a tool to effect the transmutation of metals… Continue Reading →